- java.lang.Object

- io.reactivex.rxjava3.parallel.ParallelFlowable<T>

- Type Parameters:

- T - the value type

public abstract class ParallelFlowable<T> extends Object

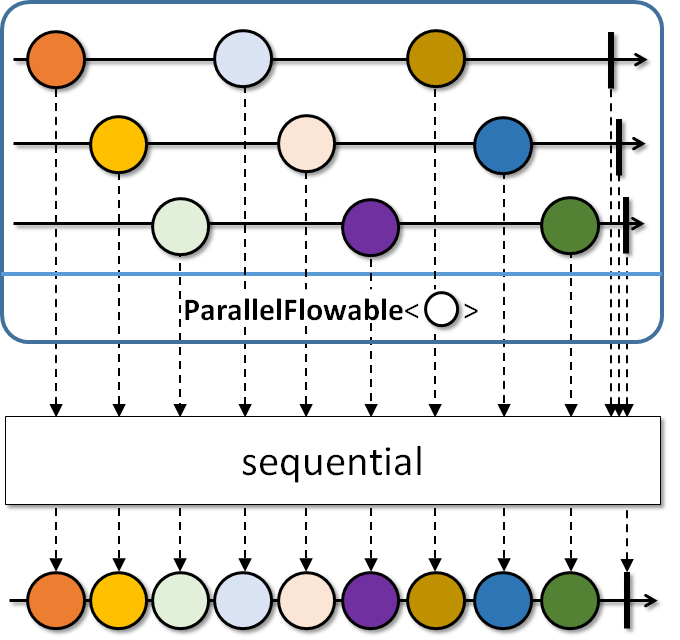

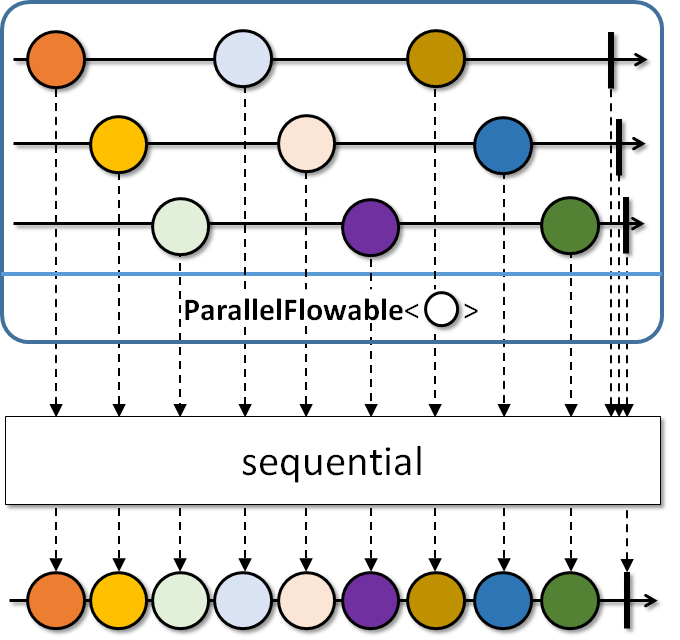

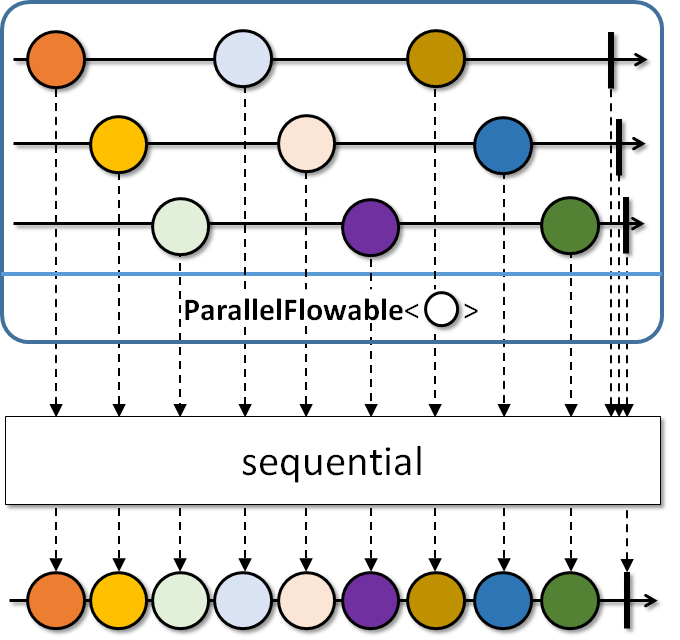

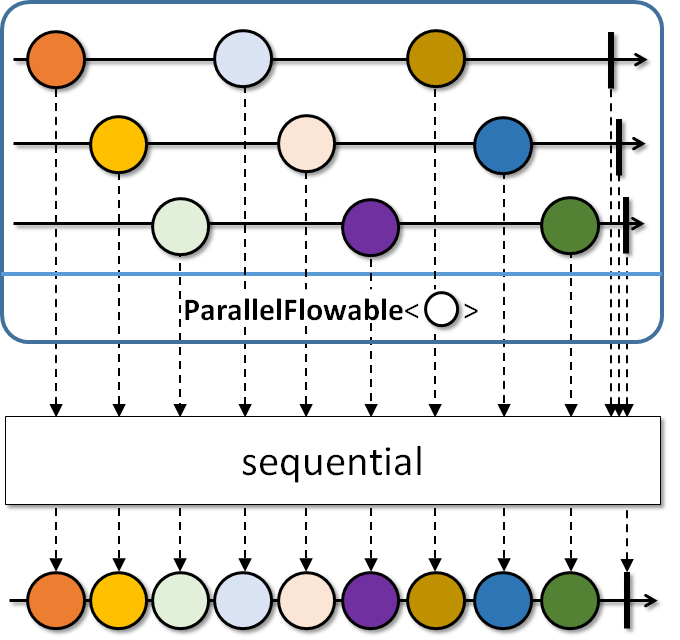

Abstract base class for parallel publishers that take an array of Subscribers.

Use from() to start processing a regular Publisher in 'rails'. Use runOn() to specify the thread on which each 'rail' should run. Use sequential() to merge the rails back into a single Flowable.

History: 2.0.5 - experimental; 2.1 - beta

- Since:

- 2.2
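The from(), runOn() and sequential() steps described above can be sketched as a small pipeline. This is a minimal illustration, not part of the original documentation: the class name ParallelPipelineSketch, the choice of 4 rails and the final sort are assumptions made for the example. The sort is there because the merged order is not guaranteed to match the source order.

```java
import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.parallel.ParallelFlowable;
import io.reactivex.rxjava3.schedulers.Schedulers;

import java.util.List;

// Hypothetical example class, not part of RxJava.
public class ParallelPipelineSketch {

    // Squares 1..10 on four rails, then merges the rails back into one sequence.
    static List<Integer> squares() {
        return ParallelFlowable.from(Flowable.range(1, 10), 4) // split the source into 4 rails
                .runOn(Schedulers.computation())               // run each rail on a scheduler worker
                .map(v -> v * v)                               // may be called concurrently across rails
                .sequential()                                  // merge the rails into a Flowable
                .sorted()                                      // restore a deterministic order
                .toList()
                .blockingGet();
    }

    public static void main(String[] args) {
        System.out.println(squares());
    }
}
```

Without runOn(), the rails would still exist, but the work would not be moved to other threads.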


Constructor Summary

| Constructor and Description |
| --- |
| `ParallelFlowable()` |

-

Method Summary

| Modifier and Type | Method | Description |
| --- | --- | --- |
| `<C> ParallelFlowable<C>` | `collect(Supplier<? extends C> collectionSupplier, BiConsumer<? super C,? super T> collector)` | Collect the elements in each rail into a collection supplied via a collectionSupplier and collected into with a collector action, emitting the collection at the end. |
| `<U> ParallelFlowable<U>` | `compose(ParallelTransformer<T,U> composer)` | Allows composing operators, in assembly time, on top of this ParallelFlowable and returns another ParallelFlowable with composed features. |
| `<R> ParallelFlowable<R>` | `concatMap(Function<? super T,? extends Publisher<? extends R>> mapper)` | Generates and concatenates Publishers on each 'rail', signalling errors immediately and generating 2 publishers upfront. |
| `<R> ParallelFlowable<R>` | `concatMap(Function<? super T,? extends Publisher<? extends R>> mapper, int prefetch)` | Generates and concatenates Publishers on each 'rail', signalling errors immediately and using the given prefetch amount for generating Publishers upfront. |
| `<R> ParallelFlowable<R>` | `concatMapDelayError(Function<? super T,? extends Publisher<? extends R>> mapper, boolean tillTheEnd)` | Generates and concatenates Publishers on each 'rail', optionally delaying errors and generating 2 publishers upfront. |
| `<R> ParallelFlowable<R>` | `concatMapDelayError(Function<? super T,? extends Publisher<? extends R>> mapper, int prefetch, boolean tillTheEnd)` | Generates and concatenates Publishers on each 'rail', optionally delaying errors and using the given prefetch amount for generating Publishers upfront. |
| `ParallelFlowable<T>` | `doAfterNext(Consumer<? super T> onAfterNext)` | Call the specified consumer with the current element passing through any 'rail' after it has been delivered to downstream within the rail. |
| `ParallelFlowable<T>` | `doAfterTerminated(Action onAfterTerminate)` | Run the specified Action when a 'rail' completes or signals an error. |
| `ParallelFlowable<T>` | `doOnCancel(Action onCancel)` | Run the specified Action when a 'rail' receives a cancellation. |
| `ParallelFlowable<T>` | `doOnComplete(Action onComplete)` | Run the specified Action when a 'rail' completes. |
| `ParallelFlowable<T>` | `doOnError(Consumer<Throwable> onError)` | Call the specified consumer with the exception passing through any 'rail'. |
| `ParallelFlowable<T>` | `doOnNext(Consumer<? super T> onNext)` | Call the specified consumer with the current element passing through any 'rail'. |
| `ParallelFlowable<T>` | `doOnNext(Consumer<? super T> onNext, BiFunction<? super Long,? super Throwable,ParallelFailureHandling> errorHandler)` | Call the specified consumer with the current element passing through any 'rail' and handles errors based on the value returned by the handler function. |
| `ParallelFlowable<T>` | `doOnNext(Consumer<? super T> onNext, ParallelFailureHandling errorHandler)` | Call the specified consumer with the current element passing through any 'rail' and handles errors based on the given ParallelFailureHandling enumeration value. |
| `ParallelFlowable<T>` | `doOnRequest(LongConsumer onRequest)` | Call the specified consumer with the request amount if any rail receives a request. |
| `ParallelFlowable<T>` | `doOnSubscribe(Consumer<? super Subscription> onSubscribe)` | Call the specified callback when a 'rail' receives a Subscription from its upstream. |
| `ParallelFlowable<T>` | `filter(Predicate<? super T> predicate)` | Filters the source values on each 'rail'. |
| `ParallelFlowable<T>` | `filter(Predicate<? super T> predicate, BiFunction<? super Long,? super Throwable,ParallelFailureHandling> errorHandler)` | Filters the source values on each 'rail' and handles errors based on the value returned by the handler function. |
| `ParallelFlowable<T>` | `filter(Predicate<? super T> predicate, ParallelFailureHandling errorHandler)` | Filters the source values on each 'rail' and handles errors based on the given ParallelFailureHandling enumeration value. |
| `<R> ParallelFlowable<R>` | `flatMap(Function<? super T,? extends Publisher<? extends R>> mapper)` | Generates and flattens Publishers on each 'rail'. |
| `<R> ParallelFlowable<R>` | `flatMap(Function<? super T,? extends Publisher<? extends R>> mapper, boolean delayError)` | Generates and flattens Publishers on each 'rail', optionally delaying errors. |
| `<R> ParallelFlowable<R>` | `flatMap(Function<? super T,? extends Publisher<? extends R>> mapper, boolean delayError, int maxConcurrency)` | Generates and flattens Publishers on each 'rail', optionally delaying errors and having a total number of simultaneous subscriptions to the inner Publishers. |
| `<R> ParallelFlowable<R>` | `flatMap(Function<? super T,? extends Publisher<? extends R>> mapper, boolean delayError, int maxConcurrency, int prefetch)` | Generates and flattens Publishers on each 'rail', optionally delaying errors, having a total number of simultaneous subscriptions to the inner Publishers and using the given prefetch amount for the inner Publishers. |
| `static <T> ParallelFlowable<T>` | `from(Publisher<? extends T> source)` | Take a Publisher and prepare to consume it on multiple 'rails' (number of CPUs) in a round-robin fashion. |
| `static <T> ParallelFlowable<T>` | `from(Publisher<? extends T> source, int parallelism)` | Take a Publisher and prepare to consume it on parallelism number of 'rails' in a round-robin fashion. |
| `static <T> ParallelFlowable<T>` | `from(Publisher<? extends T> source, int parallelism, int prefetch)` | Take a Publisher and prepare to consume it on parallelism number of 'rails', possibly in an ordered and round-robin fashion, using a custom prefetch amount and queue for dealing with the source Publisher's values. |
| `static <T> ParallelFlowable<T>` | `fromArray(Publisher<T>... publishers)` | Wraps multiple Publishers into a ParallelFlowable which runs them in parallel and unordered. |
| `<R> ParallelFlowable<R>` | `map(Function<? super T,? extends R> mapper)` | Maps the source values on each 'rail' to another value. |
| `<R> ParallelFlowable<R>` | `map(Function<? super T,? extends R> mapper, BiFunction<? super Long,? super Throwable,ParallelFailureHandling> errorHandler)` | Maps the source values on each 'rail' to another value and handles errors based on the value returned by the handler function. |
| `<R> ParallelFlowable<R>` | `map(Function<? super T,? extends R> mapper, ParallelFailureHandling errorHandler)` | Maps the source values on each 'rail' to another value and handles errors based on the given ParallelFailureHandling enumeration value. |
| `abstract int` | `parallelism()` | Returns the number of expected parallel Subscribers. |
| `Flowable<T>` | `reduce(BiFunction<T,T,T> reducer)` | Reduces all values within a 'rail' and across 'rails' with a reducer function into a single sequential value. |
| `<R> ParallelFlowable<R>` | `reduce(Supplier<R> initialSupplier, BiFunction<R,? super T,R> reducer)` | Reduces all values within a 'rail' to a single value (with a possibly different type) via a reducer function that is initialized on each rail from an initialSupplier value. |
| `ParallelFlowable<T>` | `runOn(Scheduler scheduler)` | Specifies where each 'rail' will observe its incoming values, with no work-stealing and the default prefetch amount. |
| `ParallelFlowable<T>` | `runOn(Scheduler scheduler, int prefetch)` | Specifies where each 'rail' will observe its incoming values, possibly with work-stealing, and with a given prefetch amount. |
| `Flowable<T>` | `sequential()` | Merges the values from each 'rail' in a round-robin or same-order fashion and exposes it as a regular Publisher sequence, running with a default prefetch value for the rails. |
| `Flowable<T>` | `sequential(int prefetch)` | Merges the values from each 'rail' in a round-robin or same-order fashion and exposes it as a regular Publisher sequence, running with a given prefetch value for the rails. |
| `Flowable<T>` | `sequentialDelayError()` | Merges the values from each 'rail' in a round-robin or same-order fashion and exposes it as a regular Flowable sequence, running with a default prefetch value for the rails and delaying errors from all rails till all terminate. |
| `Flowable<T>` | `sequentialDelayError(int prefetch)` | Merges the values from each 'rail' in a round-robin or same-order fashion and exposes it as a regular Publisher sequence, running with a given prefetch value for the rails and delaying errors from all rails till all terminate. |
| `Flowable<T>` | `sorted(Comparator<? super T> comparator)` | Sorts the 'rails' of this ParallelFlowable and returns a Publisher that sequentially picks the smallest next value from the rails. |
| `Flowable<T>` | `sorted(Comparator<? super T> comparator, int capacityHint)` | Sorts the 'rails' of this ParallelFlowable and returns a Publisher that sequentially picks the smallest next value from the rails. |
| `abstract void` | `subscribe(Subscriber<? super T>[] subscribers)` | Subscribes an array of Subscribers to this ParallelFlowable and triggers the execution chain for all 'rails'. |
| `<R> R` | `to(ParallelFlowableConverter<T,R> converter)` | Calls the specified converter function during assembly time and returns its resulting value. |
| `Flowable<List<T>>` | `toSortedList(Comparator<? super T> comparator)` | Sorts the 'rails' according to the comparator and returns a full sorted list as a Publisher. |
| `Flowable<List<T>>` | `toSortedList(Comparator<? super T> comparator, int capacityHint)` | Sorts the 'rails' according to the comparator and returns a full sorted list as a Publisher. |
| `protected boolean` | `validate(Subscriber<?>[] subscribers)` | Validates the number of subscribers and returns true if their number matches the parallelism level of this ParallelFlowable. |


Method Detail

-

subscribe

public abstract void subscribe(@NonNull Subscriber<? super T>[] subscribers)

Subscribes an array of Subscribers to this ParallelFlowable and triggers the execution chain for all 'rails'.

- Parameters:

- subscribers - the array of subscribers to run in parallel; the number of items must equal the parallelism level of this ParallelFlowable

- See Also:

- parallelism()
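As a rough illustration of the contract above, the following sketch subscribes exactly parallelism() subscribers, one per rail, and gathers what they receive. The class name RailSubscribeSketch and the use of TestSubscriber as a per-rail probe are assumptions made for the example. Most application code would call sequential() instead of subscribing to rails directly.

```java
import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.parallel.ParallelFlowable;
import io.reactivex.rxjava3.subscribers.TestSubscriber;
import org.reactivestreams.Subscriber;

import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.TimeUnit;

// Hypothetical example class, not part of RxJava.
public class RailSubscribeSketch {

    // Subscribes one TestSubscriber per rail and returns all received values, sorted.
    @SuppressWarnings("unchecked")
    static List<Integer> receivedAcrossRails(int rails) {
        ParallelFlowable<Integer> parallel = ParallelFlowable.from(Flowable.range(1, 6), rails);

        // The array length must match the parallelism level.
        int n = parallel.parallelism();
        Subscriber<Integer>[] subscribers = new Subscriber[n];
        List<TestSubscriber<Integer>> probes = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            TestSubscriber<Integer> probe = new TestSubscriber<>();
            probes.add(probe);
            subscribers[i] = probe;
        }

        parallel.subscribe(subscribers);

        List<Integer> received = new ArrayList<>();
        for (TestSubscriber<Integer> probe : probes) {
            probe.awaitDone(5, TimeUnit.SECONDS); // wait for the rail to terminate
            received.addAll(probe.values());
        }
        Collections.sort(received);
        return received;
    }
}
```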

-

parallelism

public abstract int parallelism()

Returns the number of expected parallel Subscribers.

- Returns:

- the number of expected parallel Subscribers

-

validate

protected final boolean validate(@NonNull Subscriber<?>[] subscribers)

Validates the number of subscribers and returns true if their number matches the parallelism level of this ParallelFlowable.

- Parameters:

- subscribers - the array of Subscribers

- Returns:

- true if the number of subscribers equals the parallelism level

-

from

@CheckReturnValue public static <T> ParallelFlowable<T> from(@NonNull Publisher<? extends T> source)

Take a Publisher and prepare to consume it on multiple 'rails' (number of CPUs) in a round-robin fashion.

- Type Parameters:

- T - the value type

- Parameters:

- source - the source Publisher

- Returns:

- the ParallelFlowable instance

-

from

@CheckReturnValue public static <T> ParallelFlowable<T> from(@NonNull Publisher<? extends T> source, int parallelism)

Take a Publisher and prepare to consume it on parallelism number of 'rails' in a round-robin fashion.

- Type Parameters:

- T - the value type

- Parameters:

- source - the source Publisher
- parallelism - the number of parallel rails

- Returns:

- the new ParallelFlowable instance

-

from

@CheckReturnValue @NonNull public static <T> ParallelFlowable<T> from(@NonNull Publisher<? extends T> source, int parallelism, int prefetch)

Take a Publisher and prepare to consume it on parallelism number of 'rails', possibly in an ordered and round-robin fashion, using a custom prefetch amount and queue for dealing with the source Publisher's values.

- Type Parameters:

- T - the value type

- Parameters:

- source - the source Publisher
- parallelism - the number of parallel rails
- prefetch - the number of values to prefetch from the source until there is a rail ready to process them

- Returns:

- the new ParallelFlowable instance

-

map

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> map(@NonNull Function<? super T,? extends R> mapper)

Maps the source values on each 'rail' to another value.

Note that the same mapper function may be called from multiple threads concurrently.

- Type Parameters:

- R - the output value type

- Parameters:

- mapper - the mapper function turning Ts into Rs

- Returns:

- the new ParallelFlowable instance

-

map

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> map(@NonNull Function<? super T,? extends R> mapper, @NonNull ParallelFailureHandling errorHandler)

Maps the source values on each 'rail' to another value and handles errors based on the given ParallelFailureHandling enumeration value.

Note that the same mapper function may be called from multiple threads concurrently.

History: 2.0.8 - experimental

- Type Parameters:

- R - the output value type

- Parameters:

- mapper - the mapper function turning Ts into Rs
- errorHandler - the enumeration that defines how to handle errors thrown from the mapper function

- Returns:

- the new ParallelFlowable instance

- Since:

- 2.2

-

map

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> map(@NonNull Function<? super T,? extends R> mapper, @NonNull BiFunction<? super Long,? super Throwable,ParallelFailureHandling> errorHandler)

Maps the source values on each 'rail' to another value and handles errors based on the value returned by the handler function.

Note that the same mapper function may be called from multiple threads concurrently.

History: 2.0.8 - experimental

- Type Parameters:

- R - the output value type

- Parameters:

- mapper - the mapper function turning Ts into Rs
- errorHandler - the function called with the current repeat count and the failure Throwable; it should return one of the ParallelFailureHandling enumeration values to indicate how to proceed

- Returns:

- the new ParallelFlowable instance

- Since:

- 2.2
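The enumeration-based overload can be sketched as follows. The example assumes the SKIP constant of ParallelFailureHandling, which drops the failing value and lets the rail continue. The class name MapFailureHandlingSketch and the division used to provoke a failure are made up for the illustration.

```java
import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.parallel.ParallelFailureHandling;
import io.reactivex.rxjava3.parallel.ParallelFlowable;

import java.util.List;

// Hypothetical example class, not part of RxJava.
public class MapFailureHandlingSketch {

    // Divides 60 by each of 0..4; the division by zero is skipped instead of failing the rail.
    static List<Integer> quotients() {
        return ParallelFlowable.from(Flowable.range(0, 5), 2)
                .map(v -> 60 / v, ParallelFailureHandling.SKIP) // ArithmeticException for v == 0 is skipped
                .sequential()
                .sorted()
                .toList()
                .blockingGet();
    }

    public static void main(String[] args) {
        System.out.println(quotients());
    }
}
```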

-

filter

@CheckReturnValue public final ParallelFlowable<T> filter(@NonNull Predicate<? super T> predicate)

Filters the source values on each 'rail'.

Note that the same predicate may be called from multiple threads concurrently.

- Parameters:

- predicate - the function returning true to keep a value or false to drop a value

- Returns:

- the new ParallelFlowable instance

-

filter

@CheckReturnValue public final ParallelFlowable<T> filter(@NonNull Predicate<? super T> predicate, @NonNull ParallelFailureHandling errorHandler)

Filters the source values on each 'rail' and handles errors based on the given ParallelFailureHandling enumeration value.

Note that the same predicate may be called from multiple threads concurrently.

History: 2.0.8 - experimental

- Parameters:

- predicate - the function returning true to keep a value or false to drop a value
- errorHandler - the enumeration that defines how to handle errors thrown from the predicate

- Returns:

- the new ParallelFlowable instance

- Since:

- 2.2

-

filter

@CheckReturnValue public final ParallelFlowable<T> filter(@NonNull Predicate<? super T> predicate, @NonNull BiFunction<? super Long,? super Throwable,ParallelFailureHandling> errorHandler)

Filters the source values on each 'rail' and handles errors based on the value returned by the handler function.

Note that the same predicate may be called from multiple threads concurrently.

History: 2.0.8 - experimental

- Parameters:

- predicate - the function returning true to keep a value or false to drop a value
- errorHandler - the function called with the current repeat count and the failure Throwable; it should return one of the ParallelFailureHandling enumeration values to indicate how to proceed

- Returns:

- the new ParallelFlowable instance

- Since:

- 2.2
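A handler function lets the decision depend on the failure itself. The sketch below is one possible shape, with assumed names: the class FilterHandlerSketch and its isEven predicate are hypothetical, and the SKIP and ERROR constants of ParallelFailureHandling are used to drop a bad value or to signal the error respectively.

```java
import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.parallel.ParallelFailureHandling;
import io.reactivex.rxjava3.parallel.ParallelFlowable;

import java.util.List;

// Hypothetical example class, not part of RxJava.
public class FilterHandlerSketch {

    // Keeps even values; rejects negative input by throwing.
    static boolean isEven(int v) {
        if (v < 0) {
            throw new IllegalArgumentException("negative: " + v);
        }
        return v % 2 == 0;
    }

    static List<Integer> evens() {
        return ParallelFlowable.from(Flowable.just(4, -1, 7, 10, -6, 2), 2)
                .filter(FilterHandlerSketch::isEven,
                        (attempt, error) -> error instanceof IllegalArgumentException
                                ? ParallelFailureHandling.SKIP    // drop the offending value
                                : ParallelFailureHandling.ERROR)  // signal anything unexpected
                .sequential()
                .sorted()
                .toList()
                .blockingGet();
    }

    public static void main(String[] args) {
        System.out.println(evens());
    }
}
```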

-

runOn

@CheckReturnValue @NonNull public final ParallelFlowable<T> runOn(@NonNull Scheduler scheduler)

Specifies where each 'rail' will observe its incoming values, with no work-stealing and the default prefetch amount.

This operator uses the default prefetch size returned by Flowable.bufferSize().

The operator will call Scheduler.createWorker() as many times as this ParallelFlowable's parallelism level.

No assumptions are made about the Scheduler's parallelism level; if the Scheduler's parallelism level is lower than the ParallelFlowable's, some rails may end up on the same thread/worker.

This operator doesn't require the Scheduler to be trampolining, as it has its own built-in trampolining logic.

- Parameters:

- scheduler - the scheduler to use

- Returns:

- the new ParallelFlowable instance

-

runOn

@CheckReturnValue @NonNull public final ParallelFlowable<T> runOn(@NonNull Scheduler scheduler, int prefetch)

Specifies where each 'rail' will observe its incoming values, possibly with work-stealing, and with a given prefetch amount.

The operator will call Scheduler.createWorker() as many times as this ParallelFlowable's parallelism level.

No assumptions are made about the Scheduler's parallelism level; if the Scheduler's parallelism level is lower than the ParallelFlowable's, some rails may end up on the same thread/worker.

This operator doesn't require the Scheduler to be trampolining, as it has its own built-in trampolining logic.

- Parameters:

- scheduler - the scheduler to use
- prefetch - the number of values to request on each 'rail' from the source

- Returns:

- the new ParallelFlowable instance
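To see that operators placed after runOn() execute on scheduler workers rather than on the subscribing thread, a sketch like the following records the thread names observed inside map(). The class name RunOnSketch, the source size and the prefetch of 16 are arbitrary choices for the illustration.

```java
import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.parallel.ParallelFlowable;
import io.reactivex.rxjava3.schedulers.Schedulers;

import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical example class, not part of RxJava.
public class RunOnSketch {

    // Records the names of the threads that execute the per-rail map function.
    static Set<String> workerThreadNames() {
        Set<String> names = ConcurrentHashMap.newKeySet(); // safe for concurrent rails
        ParallelFlowable.from(Flowable.range(1, 1000), 4)
                .runOn(Schedulers.computation(), 16) // request 16 values at a time on each rail
                .map(v -> {
                    names.add(Thread.currentThread().getName());
                    return v;
                })
                .sequential()
                .blockingSubscribe(); // wait until all rails have finished
        return names;
    }

    public static void main(String[] args) {
        System.out.println(workerThreadNames());
    }
}
```

How many distinct names show up depends on the machine and on how the scheduler assigns workers, so the sketch makes no claim about the exact set.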

-

reduce

@CheckReturnValue @NonNull public final Flowable<T> reduce(@NonNull BiFunction<T,T,T> reducer)

Reduces all values within a 'rail' and across 'rails' with a reducer function into a single sequential value.

Note that the same reducer function may be called from multiple threads concurrently.

- Parameters:

- reducer - the function to reduce two values into one

- Returns:

- the new Flowable instance emitting the reduced value, or empty if the ParallelFlowable was empty

-

reduce

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> reduce(@NonNull Supplier<R> initialSupplier, @NonNull BiFunction<R,? super T,R> reducer)

Reduces all values within a 'rail' to a single value (with a possibly different type) via a reducer function that is initialized on each rail from an initialSupplier value.

Note that the same reducer function may be called from multiple threads concurrently.

- Type Parameters:

- R - the reduced output type

- Parameters:

- initialSupplier - the supplier for the initial value
- reducer - the function to reduce a previous output of reduce (or the initial value supplied) with a current source value

- Returns:

- the new ParallelFlowable instance
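The two reduce overloads differ in what they return: one yields a single value across all rails, the other yields one value per rail that still has to be merged. A minimal sketch of both, with the hypothetical class name ReduceSketch and a simple sum as the reducer:

```java
import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.parallel.ParallelFlowable;
import io.reactivex.rxjava3.schedulers.Schedulers;

// Hypothetical example class, not part of RxJava.
public class ReduceSketch {

    // reduce(reducer): reduces within each rail, then across rails, into one value.
    static int totalSum() {
        return ParallelFlowable.from(Flowable.range(1, 100), 4)
                .runOn(Schedulers.computation())
                .reduce(Integer::sum)
                .blockingFirst();
    }

    // reduce(initialSupplier, reducer): each rail folds its values into its own Long,
    // so the per-rail results are merged and summed afterwards.
    static long totalViaPerRailSums() {
        return ParallelFlowable.from(Flowable.range(1, 100), 4)
                .runOn(Schedulers.computation())
                .<Long>reduce(() -> 0L, (acc, v) -> acc + v)
                .sequential()
                .reduce(0L, Long::sum)
                .blockingGet();
    }

    public static void main(String[] args) {
        System.out.println(totalSum());
        System.out.println(totalViaPerRailSums());
    }
}
```

Because the reducer may run concurrently on different rails, it should not share mutable state across calls; here each rail only touches its own accumulator.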

-

sequential

@BackpressureSupport(value=FULL) @SchedulerSupport(value="none") @CheckReturnValue public final Flowable<T> sequential()

Merges the values from each 'rail' in a round-robin or same-order fashion and exposes it as a regular Publisher sequence, running with a default prefetch value for the rails.

This operator uses the default prefetch size returned by Flowable.bufferSize().

- Backpressure:

- The operator honors backpressure.

- Scheduler:

- sequential does not operate by default on a particular Scheduler.

- Returns:

- the new Flowable instance

- See Also:

- sequential(int), sequentialDelayError()

-

sequential

@BackpressureSupport(value=FULL) @SchedulerSupport(value="none") @CheckReturnValue @NonNull public final Flowable<T> sequential(int prefetch)

Merges the values from each 'rail' in a round-robin or same-order fashion and exposes it as a regular Publisher sequence, running with a given prefetch value for the rails.

- Backpressure:

- The operator honors backpressure.

- Scheduler:

- sequential does not operate by default on a particular Scheduler.

- Parameters:

- prefetch - the prefetch amount to use for each rail

- Returns:

- the new Flowable instance

- See Also:

- sequential(), sequentialDelayError(int)

-

sequentialDelayError

@BackpressureSupport(value=FULL) @SchedulerSupport(value="none") @CheckReturnValue @NonNull public final Flowable<T> sequentialDelayError()

Merges the values from each 'rail' in a round-robin or same-order fashion and exposes it as a regular Flowable sequence, running with a default prefetch value for the rails and delaying errors from all rails till all terminate.

This operator uses the default prefetch size returned by Flowable.bufferSize().

- Backpressure:

- The operator honors backpressure.

- Scheduler:

- sequentialDelayError does not operate by default on a particular Scheduler.

History: 2.0.7 - experimental

- Returns:

- the new Flowable instance

- Since:

- 2.2

- See Also:

- sequentialDelayError(int), sequential()

-

sequentialDelayError

@BackpressureSupport(value=FULL) @SchedulerSupport(value="none") @CheckReturnValue @NonNull public final Flowable<T> sequentialDelayError(int prefetch)

Merges the values from each 'rail' in a round-robin or same-order fashion and exposes it as a regular Publisher sequence, running with a given prefetch value for the rails and delaying errors from all rails till all terminate.

- Backpressure:

- The operator honors backpressure.

- Scheduler:

- sequentialDelayError does not operate by default on a particular Scheduler.

History: 2.0.7 - experimental

- Parameters:

- prefetch - the prefetch amount to use for each rail

- Returns:

- the new Flowable instance

- Since:

- 2.2

- See Also:

- sequential(), sequentialDelayError()

-

sorted

@CheckReturnValue @NonNull public final Flowable<T> sorted(@NonNull Comparator<? super T> comparator)

Sorts the 'rails' of this ParallelFlowable and returns a Publisher that sequentially picks the smallest next value from the rails.

This operator requires a finite source ParallelFlowable.

- Parameters:

- comparator - the comparator to use

- Returns:

- the new Flowable instance

-

sorted

@CheckReturnValue @NonNull public final Flowable<T> sorted(@NonNull Comparator<? super T> comparator, int capacityHint)

Sorts the 'rails' of this ParallelFlowable and returns a Publisher that sequentially picks the smallest next value from the rails.

This operator requires a finite source ParallelFlowable.

- Parameters:

- comparator - the comparator to use
- capacityHint - the expected number of total elements

- Returns:

- the new Flowable instance

-

toSortedList

@CheckReturnValue @NonNull public final Flowable<List<T>> toSortedList(@NonNull Comparator<? super T> comparator)

Sorts the 'rails' according to the comparator and returns a full sorted list as a Publisher.

This operator requires a finite source ParallelFlowable.

- Parameters:

- comparator - the comparator to compare elements

- Returns:

- the new Flowable instance

-

toSortedList

@CheckReturnValue @NonNull public final Flowable<List<T>> toSortedList(@NonNull Comparator<? super T> comparator, int capacityHint)

Sorts the 'rails' according to the comparator and returns a full sorted list as a Publisher.

This operator requires a finite source ParallelFlowable.

- Parameters:

- comparator - the comparator to compare elements
- capacityHint - the expected number of total elements

- Returns:

- the new Flowable instance
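The difference between sorted and toSortedList is mainly the shape of the result: a Flowable of individual values versus a Flowable that emits one List. A small sketch, assuming a hypothetical class SortedSketch and six hard-coded values:

```java
import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.parallel.ParallelFlowable;

import java.util.Comparator;
import java.util.List;

// Hypothetical example class, not part of RxJava.
public class SortedSketch {

    // sorted(): emits the values one by one in comparator order.
    static List<Integer> viaSorted() {
        return ParallelFlowable.from(Flowable.just(5, 3, 9, 1, 7, 2), 3)
                .sorted(Comparator.<Integer>naturalOrder())
                .toList()
                .blockingGet();
    }

    // toSortedList(): emits a single, fully sorted list; 6 is the expected total element count.
    static List<Integer> viaToSortedList() {
        return ParallelFlowable.from(Flowable.just(5, 3, 9, 1, 7, 2), 3)
                .toSortedList(Comparator.<Integer>reverseOrder(), 6)
                .blockingFirst();
    }

    public static void main(String[] args) {
        System.out.println(viaSorted());
        System.out.println(viaToSortedList());
    }
}
```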

-

doOnNext

@CheckReturnValue @NonNull public final ParallelFlowable<T> doOnNext(@NonNull Consumer<? super T> onNext)

Call the specified consumer with the current element passing through any 'rail'.

- Parameters:

- onNext - the callback

- Returns:

- the new ParallelFlowable instance

-

doOnNext

@CheckReturnValue @NonNull public final ParallelFlowable<T> doOnNext(@NonNull Consumer<? super T> onNext, @NonNull ParallelFailureHandling errorHandler)

Call the specified consumer with the current element passing through any 'rail' and handles errors based on the given ParallelFailureHandling enumeration value.

History: 2.0.8 - experimental

- Parameters:

- onNext - the callback
- errorHandler - the enumeration that defines how to handle errors thrown from the onNext consumer

- Returns:

- the new ParallelFlowable instance

- Since:

- 2.2

-

doOnNext

@CheckReturnValue @NonNull public final ParallelFlowable<T> doOnNext(@NonNull Consumer<? super T> onNext, @NonNull BiFunction<? super Long,? super Throwable,ParallelFailureHandling> errorHandler)

Call the specified consumer with the current element passing through any 'rail' and handles errors based on the value returned by the handler function.

History: 2.0.8 - experimental

- Parameters:

- onNext - the callback
- errorHandler - the function called with the current repeat count and the failure Throwable; it should return one of the ParallelFailureHandling enumeration values to indicate how to proceed

- Returns:

- the new ParallelFlowable instance

- Since:

- 2.2

-

doAfterNext

@CheckReturnValue @NonNull public final ParallelFlowable<T> doAfterNext(@NonNull Consumer<? super T> onAfterNext)

Call the specified consumer with the current element passing through any 'rail' after it has been delivered to downstream within the rail.

- Parameters:

- onAfterNext - the callback

- Returns:

- the new ParallelFlowable instance

-

doOnError

@CheckReturnValue @NonNull public final ParallelFlowable<T> doOnError(@NonNull Consumer<Throwable> onError)

Call the specified consumer with the exception passing through any 'rail'.

- Parameters:

- onError - the callback

- Returns:

- the new ParallelFlowable instance

-

doOnComplete

@CheckReturnValue @NonNull public final ParallelFlowable<T> doOnComplete(@NonNull Action onComplete)

Run the specified Action when a 'rail' completes.

- Parameters:

- onComplete - the callback

- Returns:

- the new ParallelFlowable instance

-

doAfterTerminated

@CheckReturnValue @NonNull public final ParallelFlowable<T> doAfterTerminated(@NonNull Action onAfterTerminate)

Run the specified Action when a 'rail' completes or signals an error.

- Parameters:

- onAfterTerminate - the callback

- Returns:

- the new ParallelFlowable instance

-

doOnSubscribe

@CheckReturnValue @NonNull public final ParallelFlowable<T> doOnSubscribe(@NonNull Consumer<? super Subscription> onSubscribe)

Call the specified callback when a 'rail' receives a Subscription from its upstream.

- Parameters:

- onSubscribe - the callback

- Returns:

- the new ParallelFlowable instance

-

doOnRequest

@CheckReturnValue @NonNull public final ParallelFlowable<T> doOnRequest(@NonNull LongConsumer onRequest)

Call the specified consumer with the request amount if any rail receives a request.

- Parameters:

- onRequest - the callback

- Returns:

- the new ParallelFlowable instance

-

doOnCancel

@CheckReturnValue @NonNull public final ParallelFlowable<T> doOnCancel(@NonNull Action onCancel)

Run the specified Action when a 'rail' receives a cancellation.

- Parameters:

- onCancel - the callback

- Returns:

- the new ParallelFlowable instance
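The doOn... callbacks above are attached per rail, so a callback such as the one passed to doOnComplete can run once for every rail. The following sketch counts both kinds of signal. The class name PeekSketch and the counters are assumptions made for the illustration, and atomic counters are used because callbacks may be invoked from several rails.

```java
import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.parallel.ParallelFlowable;

import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example class, not part of RxJava.
public class PeekSketch {

    // Returns {items seen by doOnNext, completions seen by doOnComplete}.
    static int[] countSignals(int rails) {
        AtomicInteger items = new AtomicInteger();
        AtomicInteger completions = new AtomicInteger();

        ParallelFlowable.from(Flowable.range(1, 20), rails)
                .doOnNext(v -> items.incrementAndGet())     // once per element, on whichever rail carries it
                .doOnComplete(completions::incrementAndGet) // once per completing rail
                .sequential()
                .blockingSubscribe();

        return new int[] { items.get(), completions.get() };
    }

    public static void main(String[] args) {
        int[] counts = countSignals(3);
        System.out.println(counts[0] + " items, " + counts[1] + " rail completions");
    }
}
```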

-

collect

@CheckReturnValue @NonNull public final <C> ParallelFlowable<C> collect(@NonNull Supplier<? extends C> collectionSupplier, @NonNull BiConsumer<? super C,? super T> collector)

Collect the elements in each rail into a collection supplied via a collectionSupplier and collected into with a collector action, emitting the collection at the end.

- Type Parameters:

- C - the collection type

- Parameters:

- collectionSupplier - the supplier of the collection in each rail
- collector - the collector, taking the per-rail collection and the current item

- Returns:

- the new ParallelFlowable instance
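As a sketch of the per-rail behaviour, the example below lets each rail fill its own ArrayList and then merges the resulting lists. The class name CollectSketch is hypothetical, and how the nine values are distributed over the lists depends on how the source is dispatched to the rails.

```java
import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.parallel.ParallelFlowable;

import java.util.ArrayList;
import java.util.List;

// Hypothetical example class, not part of RxJava.
public class CollectSketch {

    // Each rail collects into its own list; the per-rail lists are then merged into one sequence.
    static List<List<Integer>> perRailLists() {
        return ParallelFlowable.from(Flowable.range(1, 9), 3)
                .<List<Integer>>collect(() -> new ArrayList<>(), (list, v) -> list.add(v))
                .sequential()
                .toList()
                .blockingGet();
    }

    public static void main(String[] args) {
        System.out.println(perRailLists());
    }
}
```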

-

fromArray

@CheckReturnValue @NonNull public static <T> ParallelFlowable<T> fromArray(@NonNull Publisher<T>... publishers)

Wraps multiple Publishers into a ParallelFlowable which runs them in parallel and unordered.

- Type Parameters:

- T - the value type

- Parameters:

- publishers - the array of publishers

- Returns:

- the new ParallelFlowable instance

-

to

@CheckReturnValue @NonNull public final <R> R to(@NonNull ParallelFlowableConverter<T,R> converter)

Calls the specified converter function during assembly time and returns its resulting value.

This allows fluent conversion to any other type.

History: 2.1.7 - experimental

- Type Parameters:

- R - the resulting object type

- Parameters:

- converter - the function that receives the current ParallelFlowable instance and returns a value

- Returns:

- the converted value

- Throws:

- NullPointerException - if converter is null

- Since:

- 2.2

-

compose

@CheckReturnValue @NonNull public final <U> ParallelFlowable<U> compose(@NonNull ParallelTransformer<T,U> composer)

Allows composing operators, in assembly time, on top of this ParallelFlowable and returns another ParallelFlowable with composed features.

- Type Parameters:

- U - the output value type

- Parameters:

- composer - the composer function from ParallelFlowable (this) to another ParallelFlowable

- Returns:

- the ParallelFlowable returned by the function
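One use of compose is to package several per-rail operators as a reusable stage. The sketch below assumes a hypothetical transformer, evenLabels(), in a hypothetical class ComposeSketch. It is only an illustration of the shape such a stage might take.

```java
import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.parallel.ParallelFlowable;
import io.reactivex.rxjava3.parallel.ParallelTransformer;

import java.util.List;

// Hypothetical example class, not part of RxJava.
public class ComposeSketch {

    // Hypothetical reusable stage: keep even values and turn them into labels.
    static ParallelTransformer<Integer, String> evenLabels() {
        return upstream -> upstream
                .filter(v -> v % 2 == 0)
                .map(v -> "even:" + v);
    }

    static List<String> labels() {
        return ParallelFlowable.from(Flowable.range(1, 6), 2)
                .compose(evenLabels()) // applied once, when the chain is assembled
                .sequential()
                .sorted()
                .toList()
                .blockingGet();
    }

    public static void main(String[] args) {
        System.out.println(labels());
    }
}
```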

-

flatMap

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> flatMap(@NonNull Function<? super T,? extends Publisher<? extends R>> mapper)

Generates and flattens Publishers on each 'rail'.

Errors are not delayed, and the operator uses unbounded concurrency along with the default inner prefetch.

- Type Parameters:

- R - the result type

- Parameters:

- mapper - the function to map each rail's value into a Publisher

- Returns:

- the new ParallelFlowable instance

-

flatMap

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> flatMap(@NonNull Function<? super T,? extends Publisher<? extends R>> mapper, boolean delayError)

Generates and flattens Publishers on each 'rail', optionally delaying errors.

It uses unbounded concurrency along with the default inner prefetch.

- Type Parameters:

- R - the result type

- Parameters:

- mapper - the function to map each rail's value into a Publisher
- delayError - whether errors from the main and the inner sources should be delayed until all of them terminate

- Returns:

- the new ParallelFlowable instance

-

flatMap

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> flatMap(@NonNull Function<? super T,? extends Publisher<? extends R>> mapper, boolean delayError, int maxConcurrency)

Generates and flattens Publishers on each 'rail', optionally delaying errors and having a total number of simultaneous subscriptions to the inner Publishers.

It uses the default inner prefetch.

- Type Parameters:

- R - the result type

- Parameters:

- mapper - the function to map each rail's value into a Publisher
- delayError - whether errors from the main and the inner sources should be delayed until all of them terminate
- maxConcurrency - the maximum number of simultaneous subscriptions to the generated inner Publishers

- Returns:

- the new ParallelFlowable instance

-

flatMap

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> flatMap(@NonNull Function<? super T,? extends Publisher<? extends R>> mapper, boolean delayError, int maxConcurrency, int prefetch)

Generates and flattens Publishers on each 'rail', optionally delaying errors, having a total number of simultaneous subscriptions to the inner Publishers and using the given prefetch amount for the inner Publishers.

- Type Parameters:

- R - the result type

- Parameters:

- mapper - the function to map each rail's value into a Publisher
- delayError - whether errors from the main and the inner sources should be delayed until all of them terminate
- maxConcurrency - the maximum number of simultaneous subscriptions to the generated inner Publishers
- prefetch - the number of items to prefetch from each inner Publisher

- Returns:

- the new ParallelFlowable instance

-

concatMap

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> concatMap(@NonNull Function<? super T,? extends Publisher<? extends R>> mapper)

Generates and concatenates Publishers on each 'rail', signalling errors immediately and generating 2 publishers upfront.

- Type Parameters:

- R - the result type

- Parameters:

- mapper - the function to map each rail's value into a Publisher

- Returns:

- the new ParallelFlowable instance

-

concatMap

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> concatMap(@NonNull Function<? super T,? extends Publisher<? extends R>> mapper, int prefetch)

Generates and concatenates Publishers on each 'rail', signalling errors immediately and using the given prefetch amount for generating Publishers upfront.

- Type Parameters:

- R - the result type

- Parameters:

- mapper - the function to map each rail's value into a Publisher
- prefetch - the number of items to prefetch from each inner Publisher

- Returns:

- the new ParallelFlowable instance

-

concatMapDelayError

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> concatMapDelayError(@NonNull Function<? super T,? extends Publisher<? extends R>> mapper, boolean tillTheEnd)

Generates and concatenates Publishers on each 'rail', optionally delaying errors and generating 2 publishers upfront.

- Type Parameters:

- R - the result type

- Parameters:

- mapper - the function to map each rail's value into a Publisher
- tillTheEnd - if true, all errors from the upstream and inner Publishers are delayed until all of them terminate; if false, the error is emitted when an inner Publisher terminates

- Returns:

- the new ParallelFlowable instance

-

concatMapDelayError

@CheckReturnValue @NonNull public final <R> ParallelFlowable<R> concatMapDelayError(@NonNull Function<? super T,? extends Publisher<? extends R>> mapper, int prefetch, boolean tillTheEnd)

Generates and concatenates Publishers on each 'rail', optionally delaying errors and using the given prefetch amount for generating Publishers upfront.

- Type Parameters:

- R - the result type

- Parameters:

- mapper - the function to map each rail's value into a Publisher
- prefetch - the number of items to prefetch from each inner Publisher
- tillTheEnd - if true, all errors from the upstream and inner Publishers are delayed until all of them terminate; if false, the error is emitted when an inner Publisher terminates

- Returns:

- the new ParallelFlowable instance
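A brief sketch of flatMap and concatMap on rails follows: each source value is expanded into a small inner Publisher, and the results are merged and sorted so the outcome is deterministic. The class name FlatMapConcatMapSketch and the expansion function are made up for the example.

```java
import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.parallel.ParallelFlowable;

import java.util.List;

// Hypothetical example class, not part of RxJava.
public class FlatMapConcatMapSketch {

    // flatMap: the inner Publishers of a rail are flattened into that rail.
    static List<Integer> expandWithFlatMap() {
        return ParallelFlowable.from(Flowable.range(1, 3), 2)
                .flatMap(v -> Flowable.just(v, v * 10))
                .sequential()
                .sorted()
                .toList()
                .blockingGet();
    }

    // concatMap: the inner Publishers of a rail are consumed one after another.
    static List<Integer> expandWithConcatMap() {
        return ParallelFlowable.from(Flowable.range(1, 3), 2)
                .concatMap(v -> Flowable.just(v, v * 10))
                .sequential()
                .sorted()
                .toList()
                .blockingGet();
    }

    public static void main(String[] args) {
        System.out.println(expandWithFlatMap());
        System.out.println(expandWithConcatMap());
    }
}
```

With synchronous inner sources like these, both variants produce the same set of values. The difference matters when the inner Publishers are asynchronous and their relative order within a rail is important.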
